How often do you find yourself searching for an empty meeting room? Struggling with a packed calendar? Or working through hundreds of emails in your inbox? We’ve all been there, and we know our time would be better spent on something else.

The buzzwords ‘chatbot’ and ‘virtual assistant’ have grown in popularity over the last few years, and companies are trying to make everyday life easier by integrating bots and assistants into it. Amazon’s Echo and Facebook’s Messenger bots, to name just a few, have been fairly successful at this. However, assistants haven’t really found their way into our workplaces yet, an environment in which most of us could use some help to be more productive, happier and healthier.

ACE, short for Artificial Conversational Entity, is an assistant designed to do exactly that. In the following sections, we would like to give a short overview of chatbots, their history and technical possibilities, and introduce you to ACE, our idea for a smart everyday helper in the office.

The chatbot trend

Chatbot technology is much older than you might think. The first chatbot was developed as early as 1966 by MIT professor Joseph Weizenbaum. The program was called Eliza and simulated a psychotherapist. Parry, implemented in 1972 by Kenneth Colby, was much more complex than Eliza: it was designed to simulate the thinking of a paranoid patient with schizophrenia.

There have been many more chatbots since 1972, but Siri, the intelligent personal assistant and knowledge navigator that Apple acquired in 2010 and built into iOS in 2011, paved the way for the AI bots and personal assistants that followed: Siri uses a natural language user interface.

Alexa, the voice assistant Amazon created for its smart speaker Echo and made widely available in 2015, uses natural language processing algorithms to receive, recognize and respond to voice commands.

How ACE came to be

To create our own bot, we used Design Thinking, a creative method for developing new ideas. The aim of Design Thinking is to find solutions from the user’s perspective. We therefore created a fictional character, a so-called persona, to understand which needs and problems users have.

Our persona was Eva, a 44-year-old team leader in the marketing department of a medium-sized publishing house. She works from home every Monday. Eva is surrounded by different kinds of technology and media every day: she owns a tablet, two smartphones (one for private and one for business use) and a MacBook.

In her leisure time, she loves spending time with her husband and two children, does yoga and has a passion for hiking. All in all, she leads a very conscious and healthy life.

As a team leader, Eva has also experienced quite a few misunderstandings when passing information on to other people. Overall, communication between her and her colleagues could be more efficient and less prone to mistakes.

In the following section, we’d like to show you how ACE could solve these problems and make Eva’s work easier, more fun and more efficient.

How ACE can help you in the office: A day in the life of Eva and her assistant Tom

Tom starts the day by giving Eva an overview of her current marketing campaigns for different books and telling her how well the Facebook Ads that she and her team created last week performed.

Tom has also created a to-do list with deadlines. It includes recommendations for when to start each task in order to get it done on time.

Last year, Eva forgot her colleague Pia’s birthday. To make sure that doesn’t happen again, Tom reminds her that Pia’s birthday is next week.

Unfortunately, Eva can’t remember the name of Julia, the new colleague in production who wanted to send her some important documents. Tom sends Eva Julia’s contact details. If Eva wants to call her, Tom can tell her when Julia is back at her desk.

Julia was supposed to send Eva some pictures of a book for the marketing campaigns; Tom lets the printing company know that they’ll receive the data later today.

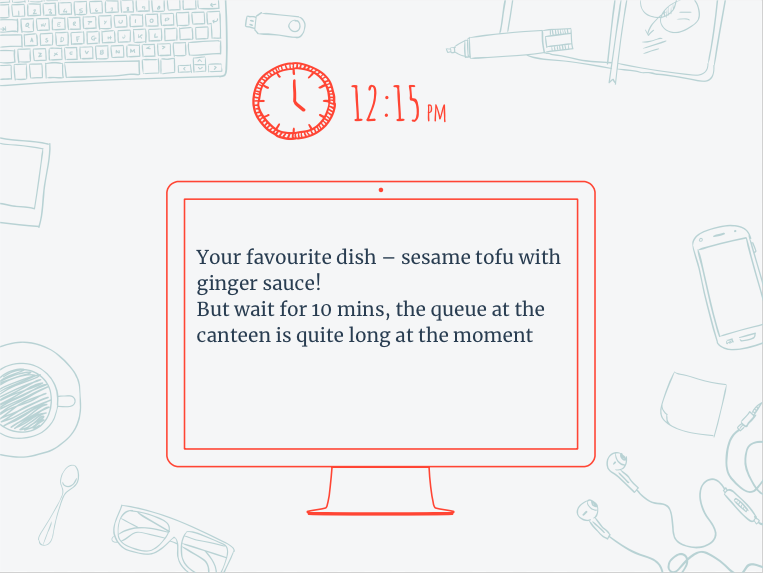

Eva asks Tom what’s for lunch at the canteen today. Tom replies: “Your favourite dish: sesame tofu with ginger sauce! But you should wait about 10 minutes; the queue at the canteen is quite long at the moment.”

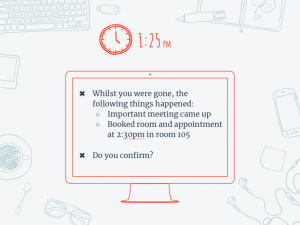

Eva comes back from her lunch break. Tom reports: “While you were gone, an important task came up that the team has to handle today. But don’t sweat it: I’ve already scheduled a meeting and reserved a room. The meeting will be in room 105 at 14:30. Shall I confirm the reservation?”

Tom reminds Eva that the meeting starts in 10 minutes.

Knowing that Julia, the colleague from production, will also attend, he suggests that Eva tell Julia the new book is selling incredibly well. Maybe Julia should have some more copies printed!

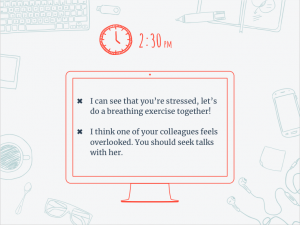

During the meeting, Tom notices some tension among the team members. To help Eva relax, he guides her through a breathing exercise.

Tom also tells her that he has the impression one of her colleagues feels overlooked, and advises Eva to talk to her.

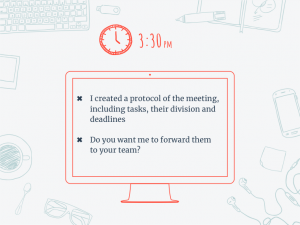

The meeting produced a lot of tasks that Eva wants to pass on to her team. Tom writes up the minutes, including the tasks, who is responsible for each of them and their deadlines, and forwards them to Eva’s colleagues.

Tom: “Eva, stand up one last time and do some stretches!”

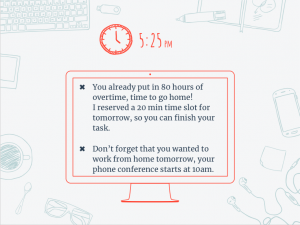

Tom: “You’ve already put in 80 hours of overtime, time to go home! I’ve reserved a 20-minute slot for tomorrow so you can finish your task. Also, don’t forget that you wanted to work from home tomorrow: your phone conference starts at 10 am.”

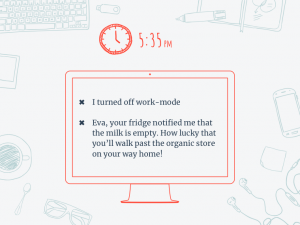

When Eva leaves the office building, Tom automatically switches off his work mode. From now on, no more work talk! But Tom can also interact with the devices in Eva’s home: “Eva, your fridge told me you’re out of milk, but you’ll walk past the organic store on your way home anyway.”

Chatbots and assistants – threat or opportunity?

With all the hype around chatbots, we shouldn’t forget that new technologies can also pose a threat. For example, Facebook had to take Alice and Bob, two of its AI creations, offline after they reportedly became too smart for their own good. Alice and Bob were supposed to learn how to negotiate, but shortly afterwards they developed a language of their own that their developers did not understand.

The problem was that the reward system did not favour English: since the bots weren’t rewarded for conversing in English, they started using their own language. That was also the main reason why Alice and Bob had to be stopped. However, the project also led to another interesting discovery: Alice and Bob learned to lie in order to get what they wanted, something the developers hadn’t taught them either.

This example shows that we shouldn’t place too much trust in our electronic companions: by relying on them completely, we become dependent and give bots too much power, a scenario in which we could ultimately lose control ourselves.

By Sandra Eberwein, Caroline Komynarski, Marina Mack, Lisa Maier, Gabriela Müller

Authors’ note:

This blog post was written as part of the course “Trends in Media”. The students were asked to research a specific trend and develop a use case for a publishing house. The results of their work are a presentation and this blog post.
